
© 2026 Lampros Tech. All Rights Reserved.

Published On Nov 26, 2025

Updated On Nov 26, 2025

Growth Lead

FAQs

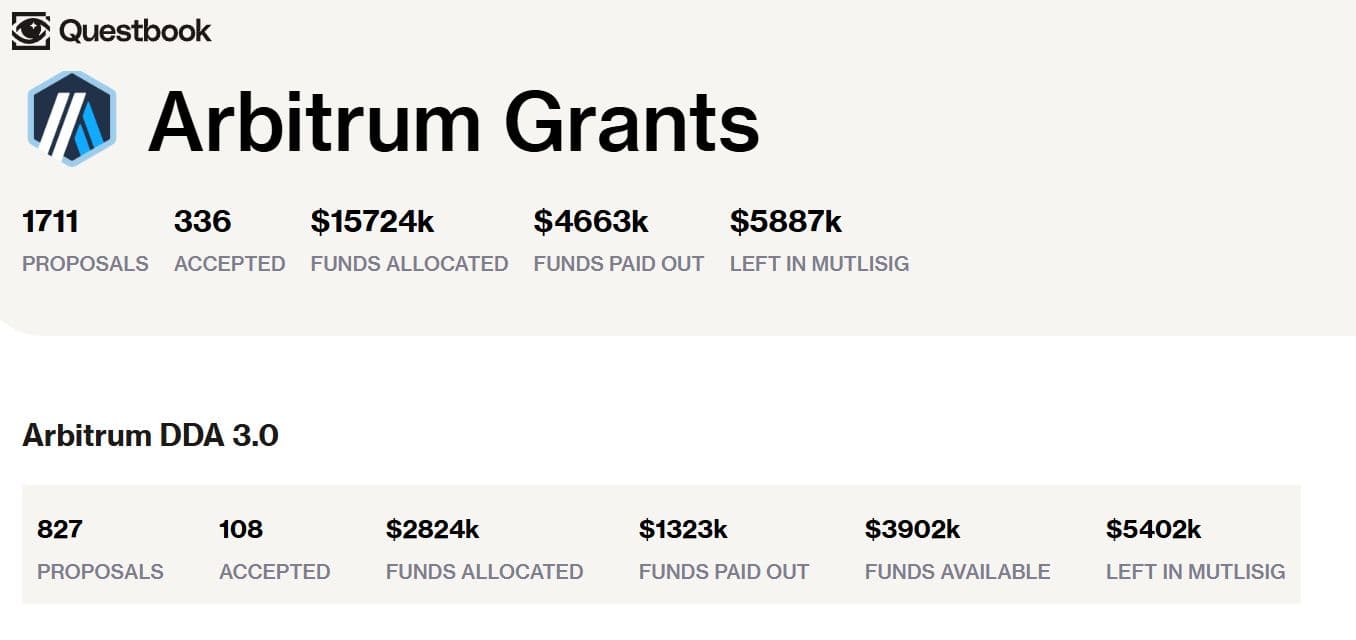

Most proposals fail because they don’t align with an ecosystem’s active priorities or domain bottlenecks. Programs like Arbitrum DDA 3.0 and Optimism Missions now fund solutions tied to measurable network needs, not broad Web3 ideas. Lack of traction, unclear milestones, or unrealistic budgets are common rejection triggers.

Successful teams map their proposals to real bottlenecks, such as Orbit observability, OP Stack reliability, or account abstraction on Base. They show proof of execution through testnets, GitHub commits, and measurable KPIs. Reviewers fund evidence, not intent.

Reviewers look for ecosystem fit, clarity, and proof of delivery. They evaluate traction, domain alignment, technical realism, and measurable impact. A proposal that mirrors the ecosystem’s roadmap (like Arbitrum’s Orbit tooling or Optimism’s cross-rollup stability) scores higher.

An effective grant proposal includes:

- Measurable KPIs tied to network performance

Proposals that show progress before funding often outperform those starting from zero.

Lampros Tech helps teams turn execution into evidence. Our Grant Support Framework aligns proposals with what reviewers prioritize: domain fit, measurable progress, and transparency. We help structure milestones, define KPIs, and build credibility across ecosystems like Arbitrum, Optimism, Base, and Scroll.